There’s nothing to Like about the flood of AI-generated photos.

Key Takeaways

- AI-generated photos have flooded Facebook, often going unnoticed due to improving technology.

- Not everyone can spot an AI-generated fake, and the arrival of AI-generated video will pose a brand new problem.

- Fake AI-generated content is prevalent on all social networks and on resources like Google Images, making it a web-wide issue.

The spread of AI-generated content is a tidal wave that can’t be stopped, and social networks like Facebook are full of it. So how can you spot this content, and why is it shared in earnest?

AI-Generated Photos Are Taking Over Facebook

It takes seconds to generate an image using tools like DALL-E or Midjourney, and these tools are improving with each update. While some people can easily spot AI-generated photos, others are less likely to question what they see appearing in their Facebook feeds.

“Seeing is believing” no longer rings true. This has been an issue ever since the days of early Photoshop fakes. But while there are some tell-tale signs that an image has been manipulated by hand, it can be a lot harder to pick out an AI-generated photo.

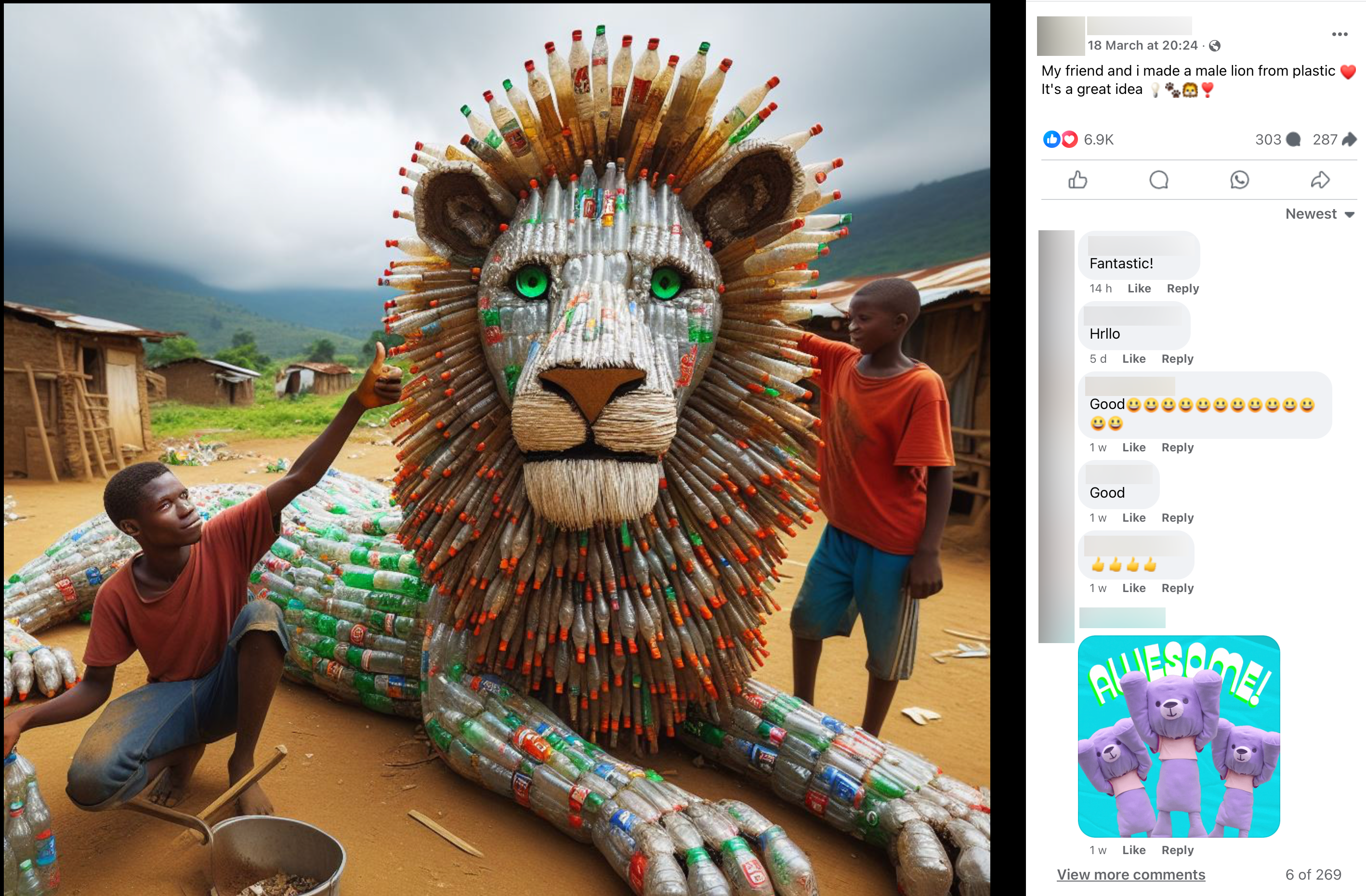

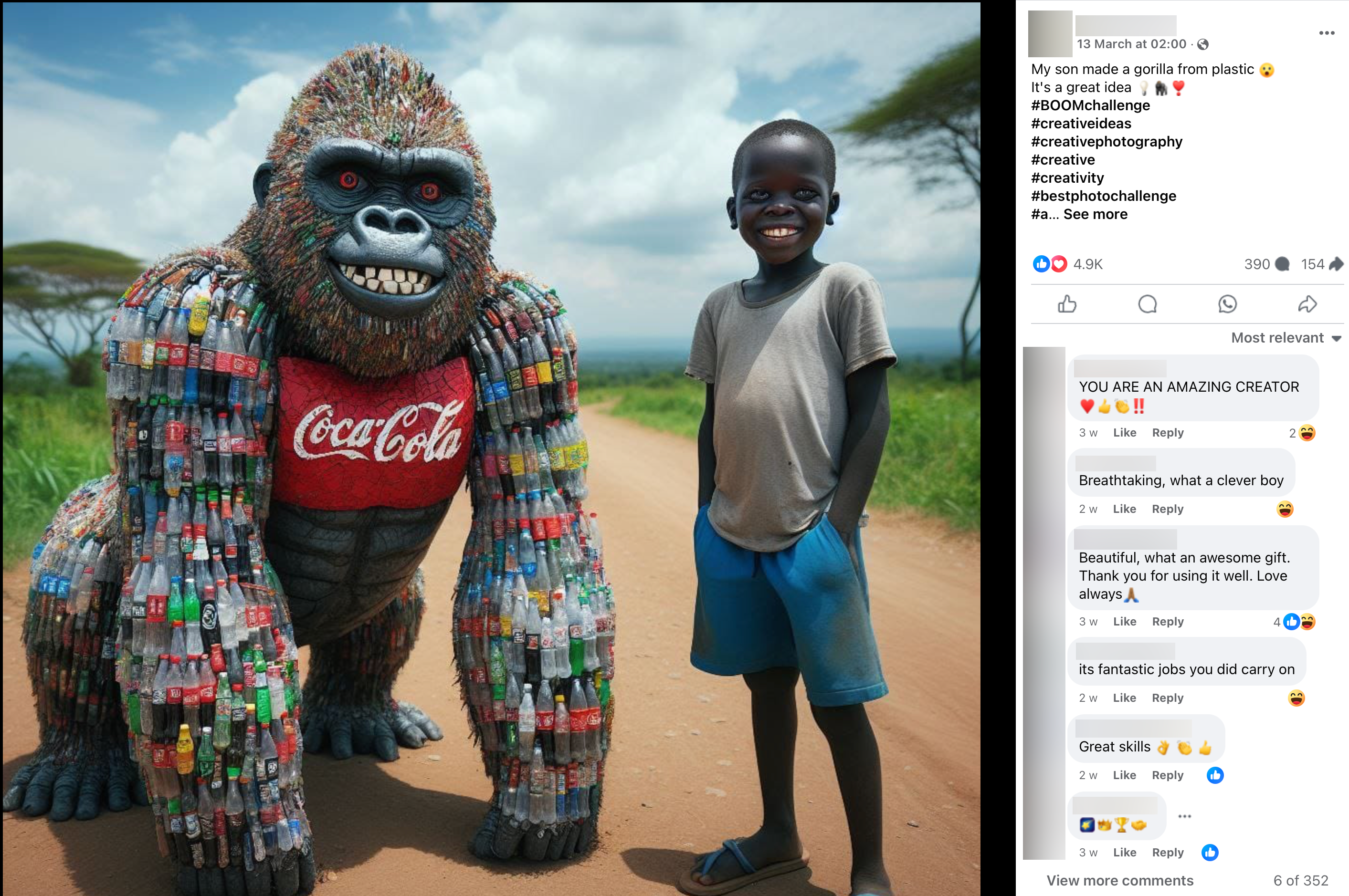

In early 2024, a wave of images hit Facebook that centered on an odd theme: plastic bottles. These images commonly featured an AI-generated photo of an object constructed entirely of plastic bottles, alongside the person (usually a child) responsible for its creation.

This craze appears to have started within Facebook groups, and many of the statues are religious in nature. Here’s an early example:

But the meme soon caught on, with many pages posting and re-posting the same or similar content, seemingly in earnest. These images were often accompanied by a “my son made this” style caption, and featured ghoulish AI-generated characteristics (to add to the questionable overall theme).

Engagement is the primary motivation for posting these images. They resonate with a specific crowd, notably those who don’t immediately spot them for the fakes they are. People engage with the content by leaving comments or reactions, further boosting the post’s reach. Facebook is also awash with bots, compounding the problem.

This plays into the way content is disseminated on networks like Facebook. The more engagement a post gets, the further it spreads. For some pages, this reach represents an opportunity to make money with sponsored posts and affiliate links, while for others, simply being in a position of influence is enough.

Spotting the AI Fakes

We’ve covered the basics of spotting AI-generated photos in the past, but it’s not a perfect science. There’s no single tool you can use to “scan” an image, and not every photo has the hallmarks of a fake. That said, there are a few basic things you can keep in mind.

Given the state of current technology, AI is far from perfect. Much of the time, there are problems with images that have been AI-generated. In particular, finer details like fingers, held objects, spectacles, and hair can give the game away. For inanimate objects, look at thin details like fence posts, window frames, and the way materials intersect.

As you can see from some of the plastic bottle abominations dotted throughout this article, many AI-generated images have a “look” or an “aura” to them that’s hard to place. It can be an “uncanny valley” effect where faces look inhuman. Lighting can be flat and mismatched, subjects may seem to glow as if they were lit from another source, and objects don’t look right when placed next to each other.

In the case of the plastic bottle statues, none of the logos are properly formed. They all look like vaguely recognizable brands, but none of them read as you’d expect. The way the bottles intersect in the sculptures often makes no sense. Plastic bottle construction is a real thing (bottles are sometimes used to build greenhouses), and the real structures look nothing like this recent batch of AI fakes.

We’ve long been told to question what we read online, particularly on Facebook. We’ve come to accept that it’s normal to question news stories, fundraising requests, fake job adverts, potential scammers, and so on. It’s time to extend that to visual media as a whole and to maintain a healthy level of skepticism.

Video Will Be Next

Computer-generated imagery, or CGI for short, has been catching people out for decades. These are the effects you see in movies, hand-crafted by humans and blended with real footage to create cinematic magic. CGI has also been extensively used to create convincing but fake online videos.

AI-generated video is a bit different. It relies not on humans crafting an illusion and blending it by hand, but on models trained on real footage that create video clips from prompts. Though convincing-looking AI-generated video is in its infancy, models like Sora from OpenAI are pushing the technology forward at an alarming pace.

On the plus side, AI-generated video cannot escape many of the problems that plague AI-generated photography. It’s possible to spot AI-generated video, but as the technology improves it will get harder. It’s also a new frontier, one that many people won’t think to question.

We’ve been Photoshopping images for decades, but video is much harder. With video, it won’t just be animals made from plastic bottles and impressive yet unlikely feats of engineering, but real people, mock news reports, deceptive “official” announcements, and much more.

It might soon be time to go back to “Facebook Classic,” where you only use the network as a tool to stay in touch with people you actually know. You’ll find this option under the “Feeds” menu by selecting “Friends.” Soon you might not be able to trust your daily dose of funny, inspiring, or cute photos and videos.

It’s Not Just a Facebook Problem

It feels unfair to single out Facebook, but the world’s largest social network just happens to be a hotbed of AI-generated tomfoolery. It was always going to be this way, as page admins scramble for Likes, followers, reach, and that all-important engagement.

Google Images is also inundated with AI-generated images. Artists are lamenting the loss of reliable reference material since an onslaught of AI-generated photos hit the web a few years ago. Hobby crocheters are sharing tips about avoiding misleading patterns that don’t exist.

Bloomberg has a story and quiz about the proliferation of AI-generated images posing as real photographs that appear in Google results. Sometimes AI-generated images appear alongside searches for famous people and events, potentially distorting reality.

We’re on the precipice of an internet that’s full to the brim with lies. This is a web-wide problem, affecting every social network and platform. AI image (and soon video) generation makes it easy to create content for platforms like TikTok and Instagram, with naturally articulate text-to-speech generators further speeding up the process.

It’s worth taking a moment to think about what this means for the future of the internet. The dead internet theory hypothesizes that most of the content and interactions we see online are no longer human. Even if this isn’t currently true, it’s not hard to imagine a future where it is.

For now, maybe it’s time to have a word with friends and family if you see them liking, commenting on, or sharing content that you know to be AI-generated. There’s no stopping it, but more people are becoming aware of the problem and that’s an important first step.

If you’re a prolific Facebook user, make sure to educate yourself about common scams and Facebook Marketplace tips and tricks too.
